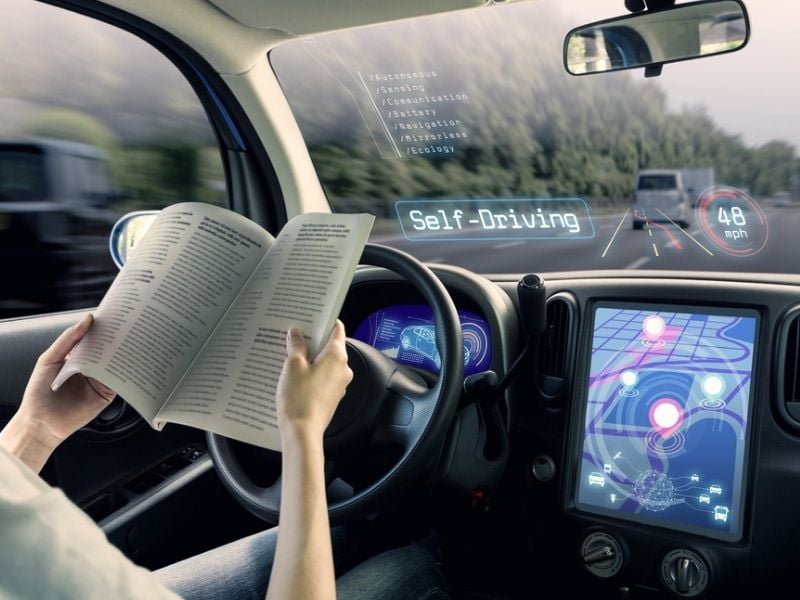

Self-driving cars are the vehicles of the future, but their implementation isn’t as simple as 1-2-3. There’s a large discussion taking place about their safety and the ethical challenges autonomous vehicles pose, especially after a series of AI-caused accidents. The ethical challenge, in these cases, is whether or not a self-driving car can make the right decision.

In the Tempe, Arizona case, an everyday situation at a crosswalk went haywire. In more extreme cases, a car would have to decide between running over a group of pedestrians or opting to swerve when the only option for movement involves hurting the driver and passengers. Here are some of the ethical challenges this industry currently faces.

The Extremes

Have you ever heard of the “trolley problem” in logic or ethics? The scenario involves a runaway train speeding down the tracks. It’s currently heading for a group of five people, but you have the option to change its direction with a lever. The only problem is that changing the direction sends it towards one person. So, do you save five lives by killing one person?

It’s these kinds of scenarios that people ponder with self-driving cars. If a car replaces the train and thinks on its own, which choice would the vehicle make? These are the extremes of ethics, but there’s some argument around them in the ethical community.

The issue is that extreme scenarios like these confine ethical dilemmas to catastrophic or nightmarish events. In reality, something as simple as crossing a crosswalk comes with the same level of danger and ethical dilemma, even if in subtler ways.

Take a less frantic, “mundane ethics” question, for instance. Let’s say your town or city has the option to create a diabetes prevention program. Should it spend money on that or use the funds to hire more social workers? The answer isn’t simple and the consequences aren’t known in full, making this scenario more realistic yet equally challenging.

The Crosswalk

While the death of a pedestrian at a crosswalk is an extreme outcome, the ethical challenge behind it is more in line with mundane ethics. So is driving through an intersection or deciding if it’s safe to make a left turn. Drivers handle these scenarios every day while weighing a wide variety of factors, ranging from low visibility to simply being unsure if a pedestrian will cross the street.

Self-driving cars, however, don’t have the same brainpower that people do. Something like recognizing a face might be simple for humans, but it is incredibly challenging for today’s top machines. It’s something we have to, in a way, teach a program to do successfully.

Figuring out how to make a car ethically handle these daily challenges means creating algorithms that become the norm in autonomy. However, failing norms in algorithms are a fast track to passengers needing a car accident law firm like Easton & Easton. So, proper engineering is a must.

At the same time, these algorithms need to be better than people are at driving. It isn’t uncommon for human drivers to make subpar decisions at a crosswalk based on age, race, perceived income, and other factors pertaining to pedestrians. Cars need to possess a higher level of ethics than people, but how can they when it’s people creating their AI?